Research projects

Ongoing research projects

Past research projects

|

Analysis of Code Repositories (Own Funds) Project leader: Abstract: Software developers often modify their projects in a similar or repetitive way. The reasons for these changes include the adoption of a changed interface to a library, the correction of mistakes in functionally similar components, or the parallelization of sequential parts of a program. If developers have to perform the necessary changes on their own, the modifications can easily introduce errors, for example due to a missed change location. Therefore, an automatic technique is desirable that identifies similar changes and uses this knowledge to support developers with further modifications. Extraction of Code-Changes Symbolic Execution of Code-Fragments In 2015 SYFEX was refined and applied to code fragments from the repositories of different software projects. In 2016 we investigated to what extent SYFEX can be used to gauge the semantic similarity of submissions for a programming contest. In 2017 and 2018 we optimized the implementation of SYFEX. We also began collecting a data set of semantically similar methods from open source repositories. We published this data set in 2019.

Techniques for symbolic execution use algorithms to check the satisfiability of logical/mathematical expressions in order to detect valid execution paths in a program. Usually, these algorithms account for a large part of the total runtime of a symbolic execution. To accelerate this satisfiability check, we experimented with a technique to replace complicated expressions with simpler equivalent expressions. These simpler expressions are obtained by using program synthesis. In the year 2020, we extended this program synthesis with a novel technique that can quickly detect whether a fixed set of operations can be used to construct an expression that is equivalent to the complicated expression. We published this approach in 2021 and were able to show that the technique can reduce the runtime of common program synthesizers by 33% on average. We subsequently extended this technique to other classes of program synthesis problems. In 2022, we performed a comprehensive evaluation of these extensions. This evaluation showed that these extensions similarly improve the runtime of program synthesizers on a larger class of program synthesis problems. We completed the work on unrealizability detectors for bit vector program synthesis in 2023 and described it in detail in a Dissertation.
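The idea of replacing a complicated expression with a simpler equivalent one, and of asking whether a fixed set of operations can express it at all, can be illustrated with a deliberately tiny sketch. It is not the published technique: it assumes 4-bit vectors, checks equivalence by exhaustive enumeration instead of a solver, and only considers candidates consisting of a single operation. All names (`OPS`, `signature`, `find_single_op`) are hypothetical.

```python
from itertools import product

WIDTH = 4                      # assumption: 4-bit vectors keep enumeration cheap
MASK = (1 << WIDTH) - 1
INPUTS = list(product(range(1 << WIDTH), repeat=2))


def signature(fn):
    """Evaluate fn on every pair of inputs; equal signatures mean equivalence."""
    return tuple(fn(x, y) & MASK for x, y in INPUTS)


def complicated(x, y):
    """The 'complicated' expression that a simpler one should replace."""
    return (x | y) - (x & y)


# Hypothetical pool of operations a synthesizer may use.
OPS = {
    "and": lambda a, b: a & b,
    "or": lambda a, b: a | b,
    "xor": lambda a, b: a ^ b,
    "add": lambda a, b: (a + b) & MASK,
}


def find_single_op(target, allowed):
    """Return the first allowed operation equivalent to target, or None."""
    goal = signature(target)
    for name in allowed:
        if signature(OPS[name]) == goal:
            return name
    return None
```

With `["and", "or", "xor"]` allowed, the search finds `xor`; with only `["and", "or"]` it returns `None`. In this sketch `None` merely means that no single operation suffices. A real unrealizability detector has to rule out all expressions that can be built from the allowed operations.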

Detection of Semantically Similar Code Fragments We further improved the implementation of this approach in 2017 and 2018. Additionally, we focused on evaluating the approach with pairs of methods from software repositories and from programming exercises. Moreover, we created a data set of semantically similar methods from open-source software repositories that we published in 2019.

Techniques for symbolic execution rely on algorithms that check the satisfiability of logical/mathematical expressions. These checks determine whether an execution path in a program is feasible. The algorithms often account for a large share of the total computation time. To speed up this satisfiability check, in 2019 and 2020 we experimented with a technique to replace complicated expressions with simpler expressions that have the same meaning. These simpler expressions result from the application of program synthesis. In 2020 we augmented the program synthesis with a novel approach that detects beforehand whether a given set of operations can form an expression with the same meaning as a more complicated expression.

Semantic Code Search Clustering of Similar Code-Changes Publications:

|

|

Automatic Detection of Race-Conditions (Own Funds) Project leader: Abstract: Large software projects with hundreds of developers are difficult to review and often contain many bugs. Automatic tests are a well-established technique to test sequential and deterministic software. They test the whole project (system test) or each module by itself (unit test). However, recent software contains more and more parallelism. This introduces several new bug patterns, like deadlocks and concurrent memory accesses, that are harder or even impossible to detect reliably using conventional test methods. Whether the faulty behavior actually shows at runtime depends on the concrete scheduling of the threads, which is nondeterministic and varies between individual executions depending on the underlying system. Due to this unpredictable behavior, such bugs do not necessarily manifest in an arbitrary test run or may never arise in the testing environment at all. As a result, conventional tests are not well suited for modern, concurrent software. In 2016 existing approaches were studied regarding their usability as a starting point for our project. The most promising method uses model checking and predefined assertions to construct thread schedules that trigger the faulty behavior. However, the approach is currently infeasible for larger projects because only very small programs could be analyzed in reasonable time. Therefore, we focused on automatically detecting and removing statements that are unrelated to the parallelism or to the potentially faulty code parts in order to decrease the execution time of the preliminary static analysis. In 2017 the work on automatically reducing programs to speed up further analysis was continued. Furthermore, we evaluated whether the concept of mutation testing can be applied to parallel software as well. The results indicate that this extension can indeed be used to rate the quality of tests. 
However, to complete the analysis for larger programs in reasonable time, a few heuristics need to be applied during the process. In 2018 the focus moved to a deterministic execution of test cases. A concept to reproduce results during the execution was developed: In addition to the test case, a schedule specifies the dynamic behaviour of the threads. Instrumenting the code at previously marked positions and other relevant byte code instructions allows a separate control thread to enforce the schedule. When modifying the source code, the marked positions in the code need to be updated as well to keep them consistent with the test cases. A merging technique similar to the ones used in version control systems shall be used to automatically update the positions. Up to 2019, this project was a contribution of the Chair of Computer Science 2 (Programming Systems) to the IZ ESI (Embedded Systems Initiative, http://www.esi.fau.de/ ). In this context, several improvements for the quality of concurrent software were analyzed. The take-away result was that different approaches are applicable and required, but they also often suffer from long analysis times.

Beyond the ESI project, we improved the usefulness of mutation testing by developing a tool for equivalence detection and test case generation. A paper that we submitted on this work was accepted.

In 2020, we studied and evaluated approaches to detect external race conditions. Whereas in classic race conditions several threads of the analyzed software fail to work together properly, in external race conditions the software interacts with independent, unknown components. Examples are other programs, the operating system, or even malicious code written by attackers that interferes with the analyzed software.

In 2021, we developed a system to statically detect race conditions when using external resources. A common pattern is to check properties of files and later access the files again, assuming the previously determined properties still hold. However, if the file is modified in the meantime, numerous problems can occur (time-of-check to time-of-use). Besides unexpected results, attackers can even modify the file to enforce malicious behavior and compromise the system. With our approach, such vulnerable code can be detected in the software.
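The time-of-check to time-of-use pattern can be illustrated with a short sketch. It does not show the detection system itself, only the kind of code such an analysis would flag and one common way to avoid the separate check. The function names are hypothetical.

```python
import os
import tempfile


def read_checked(path):
    """Vulnerable pattern: the file may change between the check and the use."""
    if os.access(path, os.R_OK):          # time of check
        with open(path) as handle:        # time of use
            return handle.read()
    return None


def read_direct(path):
    """Safer pattern: skip the separate check and handle failure at the use."""
    try:
        with open(path) as handle:
            return handle.read()
    except OSError:
        return None


def demo():
    """Create a temporary file, read it both ways, then remove it again."""
    fd, path = tempfile.mkstemp()
    os.write(fd, b"data")
    os.close(fd)
    try:
        return read_checked(path), read_direct(path)
    finally:
        os.remove(path)
```

In `read_checked`, another process can replace or remove the file after `os.access` has returned, so the property that was checked no longer holds when `open` runs. `read_direct` has no such window because the check and the use are the same operation.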

Publications:

|

Automatic Code Parallelization at Runtime(Own Funds) Project leader: , Abstract:Our automatic parallelization efforts are currently focused on dynamic parallelization. While a program is running, it is analyzed to find loops where parallelization can help performance. Our current idea is to run long-running loops three times. The first two runs analyze the memory accesses of the loop and can both run in parallel. The first run stores in a shared data structure for every memory address in which loop iteration a write access happens. We do not need any synchronization for this data structure, only the guarantee that one value is written to memory, when two concurrent writes happen. In the second pass we check for every memory access, if it has a dependency to one of the stored write accesses. A write access is part of any data-dependency, so we can find all types of data dependencies. If we do not find any, the loop is actually run in parallel. If we find dependencies, the loop is executed sequentially. We can execute the analyses in parallel to a modified sequential execution of the first loop iterations. In 2014 we enhanced the analysis so that a loop can start running while the remainder of the loop is analyzed to see if it can be run in parallel. To allow the sequential loop to execute while the tail of the loop is analyzed we needed to instrument the sequential loop slightly. The result is that the loop runs only slightly slower if the loop cannot be parallelized, but if the loop is found to be parallelizable, speedup is near to linear. Finally, we also created a new language that uses the above library for run-time parallelization. Any loops that the programmer marked as candidates for run-time parallelization are analyzed for constructs that the library cannot yet handle. If the loop is clean, code is generated that uses the library's macros. 
The project is a contribution of the Chair of Computer Science 2 (Programming Systems) to the IZ ESI (Embedded Systems Initiative, http://www.esi-anwendungszentrum.de/) |
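The two analysis passes described above can be sketched as follows. This is a simplified, sequential model under stated assumptions: each loop iteration is given as a list of `(kind, address)` accesses, where `kind` is `"r"` or `"w"`, and all function names are hypothetical. The actual library instruments running code and performs both passes in parallel.

```python
def record_writes(accesses_per_iteration):
    """Pass 1: remember, per address, one iteration that writes to it."""
    writer = {}
    for iteration, accesses in enumerate(accesses_per_iteration):
        for kind, address in accesses:
            if kind == "w":
                # Any single writer suffices; later writes simply overwrite.
                writer[address] = iteration
    return writer


def has_cross_iteration_dependency(accesses_per_iteration, writer):
    """Pass 2: flag any access to an address written by a different iteration."""
    for iteration, accesses in enumerate(accesses_per_iteration):
        for _kind, address in accesses:
            if address in writer and writer[address] != iteration:
                return True
    return False


def can_parallelize(accesses_per_iteration):
    """Run both passes and report whether the loop has no dependencies."""
    writer = record_writes(accesses_per_iteration)
    return not has_cross_iteration_dependency(accesses_per_iteration, writer)
```

For a loop such as `a[i] = a[i] + 1`, every iteration touches only its own address and `can_parallelize` returns `True`. For `a[i] = a[i-1] + 1`, iteration `i` reads an address written by iteration `i-1`, so the loop is reported as sequential. Storing only one writer per address is enough because a second writer from another iteration is itself detected as a conflicting access in the second pass.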

|

Design for Diagnosability (Third Party Funds Single) Project leader: Abstract: Many software systems behave obtrusively during the test phase or even in normal operation. The diagnosis and the therapy of such runtime anomalies is often time-consuming and complex, sometimes even impossible. There are several possible consequences for using the software system: long response times, inexplicable behaviors, and crashes. The longer the consequences remain unresolved, the higher the accumulated economic damage. Before we successfully completed the project in July 2016, we made the following contributions:

Although funding expired in 2016, we made further contributions in 2017:

We continued to make further contributions to the research project in 2018:

External Partners:

|

|

Adaptive Algorithms for RF-based Locating Systems(Third Party Funds Single) Project leader: Abstract:The goal of this project is the development of adaptive algorithms for radio-based realtime locating systems. In the scope of this project we cover three essential topics: Automatic configuration of event detectors. In previous research projects we built the basics for the analysis of noisy sensor data streams. However, event Evaluation of machine learning techniques for locating applications. In previous research projects we already developed machine learning algorithms for radio-based locating systems (e.g., evolutionary algorithms to estimate antenna positions and orientations). This work package investigates further approaches that use machine learning to enhance the performance of realtime locating systems. Evaluation of vision-based techniques to support radio-based realtime locating. |

Embedded Realtime Language Development Framework (Own Funds) Project leader: Abstract: ErLaDeF is our test-bed for new programming language and compiler techniques. Our main focus is on building infrastructure for easier (hard + soft) real-time embedded parallel systems programming. Runtime Parallelization of Programs Our automatic parallelization efforts are currently focused on dynamic parallelization. While a program is running, it is analyzed to find loops where parallelization can help performance. Our current idea is to run long-running loops three times. The first two runs analyze the memory accesses of the loop and can both run in parallel. The first run stores in a shared data structure for every memory address in which loop iteration a write access happens. We do not need any synchronization for this data structure, only the guarantee that one value is written to memory when two concurrent writes happen. In the second pass we check for every memory access whether it has a dependency on one of the stored write accesses. A write access is part of any data dependency, so we can find all types of data dependencies. If we do not find any, the loop is actually run in parallel. If we find dependencies, the loop is executed sequentially. We can execute the analyses in parallel to a modified sequential execution of the first loop iterations. In 2013 we explored alternatives to polymorphism and inheritance that may be easier to analyze. We also examined alternative thread synchronization methods, for example transactional memory, implicit synchronization, remote procedure calls, etc. In 2014 we enhanced the analysis so that a loop can start running while the remainder of the loop is analyzed to see if it can be run in parallel. To allow the sequential loop to execute while the tail of the loop is analyzed, we needed to instrument the sequential loop slightly. 
The result is that the loop runs only slightly slower if the loop cannot be parallelized, but if the loop is found to be parallelizable, speedup is near to linear. Finally, we also created a new language that uses the above library for run-time parallelization. Any loops that the programmer marked as candidates for run-time parallelization are analyzed for constructs that the library cannot yet handle. If the loop is clean, code is generated that uses the library's macros. Pylon is a language that is close to scripting languages (but is statically typed). A large part of the complexity that a programmer would normally take care of when creating an application is moved to the compiler (i.e., type inference). The programmer does not have to think about types at all. By analyzing the expressions in the program, types are inferred (duck typing). The language is also implicitly parallel: the programmer does not need to have expert knowledge to parallelize an application. The compiler automatically decides what to run in parallel. Finally, the language is kept simple so that learning the language remains easy for novice programmers. For example, we kept the number of keywords small. Our second approach to bring compiler analysis to interactive speed is to make the analysis itself parallel. In 2013 we continued to develop data-parallel formulations of basic compiler analyses. We have started to implement a generic data-parallel predicate propagation framework. Its data-parallel forms are then portably executable on many different multi-core architectures. Object-oriented languages offer the possibility to dynamically allocate objects. The memory required for this is allocated at run-time. However, in contrast to desktop systems, embedded systems typically have very little memory. 
If the 'new' operator is used often in an embedded system (such systems are now starting to be programmed with higher-level languages such as Java and C++ that include 'new'), memory can be exhausted at run-time, causing the embedded system to crash. In 2014, we created an analysis to find this problem at compile-time and report it to the developer. |

|

Efficient Software Architectures for Distributed Event Processing Systems(Third Party Funds Single) Project leader: Abstract:Localization Systems (also known as Realtime Location Systems, or RTLS) become more and more popular in industry sectors such as logistics, automation, and many more. These systems provide valuable information about whereabouts of objects at runtime. Therefore, processes can be traced, analyzed, and optimized. Besides the research activities at the core of localization systems (like resilience and interference-free location technologies or methods for highly accurate positioning), algorithms and techniques emerge that identify meaningful information for further processing steps. Our research focuses on automatic configuration methods for RTLSs as well as on the generation of dynamic motion models and techniques for event processing on position streams at runtime. In 2011, we investigated whether events can be predicted after analyzing and learning event streams from the localization system at runtime. As a result, we are able to deduce models that represent the information buried in the event stream to predict future events. We developed several methods and techniques in 2012 that process and detect events with low latency. Events (composite, complex) can be detected by means of a hierarchical aggregation of sub-events that themselves are detected by (several) event detectors processing sub-information in the event stream. This greatly reduces the complexity of the detection components and renders them fully maintainable. They even can use parallel or distributed cluster architectures more efficiently so that important events can be detected within a few milliseconds. In 2013 we further minimized detection latency in distributed event-based systems: first, a new migration technique modifies and optimizes the allocation of software components in a networked environment at runtime to minimize networking overhead and detection latencies. 
Second, a speculative event processing technique uses conservative buffering techniques to exploit available system resources. We also created and published a representative data set (consisting of realtime position data and event streams) and a corresponding task description. In 2014 we investigated fundamental approaches to handle uncertainties (both w.r.t. the definition of event detectors and to the events). We implemented a promising prototype of an event-based system that is no longer deterministic but instead evaluates several possible system states in parallel to achieve a detection with a much higher robustness and correctness. The domain expert can parameterize the event detectors by attaching probabilities or probability functions to the generated events. In 2015 we improved, optimized and published our approach. Furthermore, we started to investigate approaches to learn optimal parameter sets for the event detectors. Thus, a manual adjustment and tuning of parameters (like thresholds) becomes unnecessary. The project is a contribution of the Programming Systems Group to the IZ ESI http://www.esi.uni-erlangen.de/ |
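The hierarchical aggregation of sub-events mentioned above can be sketched with two small detectors, where the second consumes the output of the first. This is an illustrative assumption, not the project's architecture: the event kinds (`enter`, `leave`, `short_visit`), the rectangular zone, and all names are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Event:
    kind: str
    time: float
    obj: str


def detect_zone_events(positions, zone):
    """Low-level detector: emit enter/leave events from a position stream."""
    x0, y0, x1, y1 = zone
    inside = {}
    events = []
    for time, obj, x, y in positions:
        now = x0 <= x <= x1 and y0 <= y <= y1
        before = inside.get(obj, False)
        if now and not before:
            events.append(Event("enter", time, obj))
        elif before and not now:
            events.append(Event("leave", time, obj))
        inside[obj] = now
    return events


def detect_short_visits(zone_events, max_duration):
    """Higher-level detector: combine enter and leave into a composite event."""
    entered = {}
    visits = []
    for event in zone_events:
        if event.kind == "enter":
            entered[event.obj] = event.time
        elif event.kind == "leave" and event.obj in entered:
            if event.time - entered.pop(event.obj) <= max_duration:
                visits.append(Event("short_visit", event.time, event.obj))
    return visits
```

Each detector only sees the sub-information it needs: the first works on raw positions, the second only on enter and leave events. This separation keeps the individual detectors small and allows them to run on different nodes.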

|

Computer Science basics as an essential building block of modern STEM field curricula (Third Party Funds Single) Project leader: , , Abstract: The increasing digitalization of all areas of science and life renders competencies in the foundations of computer science essential for all students of technical subjects and beyond. The corresponding courses, especially the introductory ones, are often problematic hurdles for the success of their academic studies and may lead to a dropout. |

|

Graphs and Graph Transformations (Own Funds) Start date: 01.10.2004 Abstract: Graphs are often used as an intuitive aid for the clarification of complex matters. Examples from outside computer science include chemistry, where molecules are modeled in a graphical way. In computer science, data or control flow charts are often used, as well as entity relationship charts or Petri nets, to visualize software or hardware architectures. Graph grammars and graph transformations combine ideas from the fields of graph theory, algebra, logic, and category theory to formally describe changes in graphs. Category theory is an attractive tool for the description of different structures in a uniform way, e.g., the different models for asynchronous processes: Petri nets are based on standard labeled graphs, state charts use hierarchical graphs, parallel logic programming can be interpreted in a graph-theoretical way using so-called jungles, and actor systems can be visualized as graphs whose labeling alphabet is a set of term graphs. Lately, we have concentrated our attention on a theoretical aspect. Our work on graph transformation is based on notions borrowed from category theory. The so-called double-pushout approach represents a production by two morphisms starting at a common interface graph. One pushout glues the left-hand side of the production into the context, the other does the same with the right-hand side. Effectively constructing a derivation step, however, requires finding a pushout complement on the left-hand side. Some people consider this disadvantageous. In 1984, Raoult proposed to model graph rewriting by a single pushout; Loewe studied this approach extensively, but the discussion was mainly restricted to injective morphisms. Under this assumption, the approaches are equivalent. Some relevant applications such as term graph rewriting, however, lead to non-injective morphisms. 
We have examined these cases in detail, and we could show that the equivalence also holds for non-injective cases as long as the handle satisfies some reasonable conditions. |
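For orientation, the double-pushout construction described above is commonly drawn as the following diagram. The symbols are the usual textbook notation and are assumptions here, since the text does not name them: a production consists of the two morphisms $l$ and $r$ starting at the interface graph $K$, $m$ is the match of the left-hand side $L$ in the host graph $G$, $D$ is the pushout complement (the context), and $H$ is the derived graph. Both squares are required to be pushouts.

```latex
\[
\begin{array}{ccccc}
L & \xleftarrow{\;l\;} & K & \xrightarrow{\;r\;} & R \\
\downarrow{\scriptstyle m} & & \downarrow{\scriptstyle k} & & \downarrow{\scriptstyle m^{*}} \\
G & \xleftarrow{\;l^{*}\;} & D & \xrightarrow{\;r^{*}\;} & H
\end{array}
\]
```

Constructing a derivation step means first finding $D$ such that the left square is a pushout, and then building $H$ as the pushout of $k$ and $r$.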

|

Cooperative Exploration and Analysis of Software in a Virtual/Augmented Reality Appliance(Third Party Funds Group – Sub project) Overall project: Cooperative Exploration and Analysis of Software in a Virtual/Augmented Reality Appliance Abstract:

Understanding software accounts for a large share of the programming effort for a software system: up to 30% in development projects and up to 80% in maintenance projects. Therefore, an efficient and effective way of comprehending software is necessary in a modern software engineering workplace. Three-dimensional software visualization already boosts comprehension and efficiency, so utilization of the latest virtual reality techniques seems natural. Within the scope of the Holoware project, we create an environment to cooperatively explore and analyze a software project using virtual/augmented reality techniques as well as artificial intelligence algorithms. The software project in question is visualized in said virtual reality, such that multiple participants can simultaneously explore and analyze the software. They can cooperate by communicating about their findings. Different participants benefit from different perspectives on the software, which is augmented by domain-specific additional information. This provides them with intuitive access to the structure and behaviour of the software. Various use cases are possible, for example the cooperative analysis of a runtime anomaly in a team of domain experts. The domain experts can see the same static structure, augmented with domain-specific and detailed information. In the VR environment, they can share their findings and cooperate using their different expertise.

In addition, the static and dynamic properties of the software system are analyzed. Static properties include source code, static call relationships or metrics such as LoC, cyclomatic complexity, etc. Dynamic properties can be grouped into logs, traces, runtime metrics, or configurations that are read in at runtime. The challenge lies in aggregating, analyzing, and correlating this wealth of information. An anomaly and significance detection is developed that automatically detects both structural and runtime anomalies. In addition, a prediction system is set up to make statements about component health. This makes it possible, for example, to predict which components are at risk of failing in the near future. Previously, the log entries were added to the traces, creating a detailed picture of the dynamic call relationships. These dynamic relationships are mapped to the static call graph because they describe calls that do not result from the static analysis (for example, REST calls across several distributed components).
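The mapping of dynamic call relationships onto the static call graph can be sketched as a simple merge of edge sets. The sketch assumes that both the static analysis and the traces yield `(caller, callee)` pairs; the labels and the function name are hypothetical and do not describe the Holoware implementation.

```python
def merge_call_graphs(static_edges, trace_edges):
    """Label every call edge as 'static', 'both', or 'dynamic-only'."""
    static_set = set(static_edges)
    merged = {edge: "static" for edge in static_set}
    for edge in trace_edges:
        # Edges seen only at runtime (e.g. REST calls) are missing statically.
        merged[edge] = "both" if edge in static_set else "dynamic-only"
    return merged
```

Edges labeled `dynamic-only` are the ones that static analysis cannot see, such as calls between distributed components.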

In 2018, the following significant contributions have been made:

In 2019 we achieved the following improvements:

Our paper "Towards Collaborative and Dynamic Software Visualization in VR" has been accepted for publication at the International Conference on Computer Graphics Theory and Applications (VISIGRAPP) 2020. It shows how effectively our prototype supports the software comprehension process. In 2020, our paper "A Layered Software City for Dependency Visualization" was accepted at the International Conference on Computer Graphics Theory and Applications (VISIGRAPP) 2021 and later received the "Best Paper Award". We demonstrated that our Layered Layout for Software Cities simplifies the analysis of software architecture and outperforms the standard layout by far. We successfully concluded the research project with a final prototype and the resulting publications.

In 2021, after the end of the official project funding, we were asked to submit an extended version of the award paper ("Static And Dynamic Dependency Visualization In A Layered Software City") for review to a journal. In it we present a night view of the city that shows dynamic dependencies as arcs. We thus addressed a central issue that had remained open: the visualization of dynamic dependencies. In the paper "Trace Visualization within the Software City Metaphor: A Controlled Experiment on Program Comprehension" at the IEEE Working Conference on Software Visualization (VISSOFT), we displayed dynamic dependencies within the Software City by means of light intensities and were able to show that this representation is more helpful than drawing all dependencies. For this paper, too, we were invited to submit an extended journal article, "Trace Visualization within the Software City Metaphor: Controlled Experiments on Program Comprehension", for review. This article demonstrates an extended visualization of dynamic dependencies and colors arcs based on HTTP status codes.

In 2022, both journal papers were accepted: "Static And Dynamic Dependency Visualization in a Layered Software City" is published in Springer Nature Computer Science Journal and "Trace Visualization within the Software City Metaphor: Controlled Experiments on Program Comprehension" was accepted for the Information and Software Technology Journal. For the finalization of Holoware, all extensions were combined into one single visualization. For this purpose, different views were applied, allowing the user to switch between them: in the day view, the software architecture can be analyzed in the novel Holoware layered layout, and in the night view, dynamic dependencies are displayed. As part of a master thesis, Holoware was also implemented as an AR visualization, so that it can easily be used as a showcase or in everyday work.

In mid 2023, we finalized the project with the dissertation "Visualizing the statics, dynamics and infrastructure of software using the city metaphor". It summarizes all investigated aspects: (a) the static structure of the system to understand the software architecture, (b) the dynamics of the system to understand the dynamic dependencies (e.g. modern microservice architectures), and (c) the infrastructure of the system to analyze costs and promote the understanding of software operation. We also uncovered another use case: the use of Holoware at trade fairs. The visualization of the software makes it easy to get into conversation with other software developers, as the visualized software can be discussed immediately. To this end, we simplified the setup of the AR and VR visualization so that Holoware can easily be started without a lot of prior technical knowledge. In addition, we improved the contrast of the visualization to make it easier to recognize outlines and arcs, especially in very bright lighting conditions.

External Partners:

Publications:

|

|

Incremental Code Analysis(Own Funds) Project leader: Abstract:To ensure that errors in a program design are caught early in the development process, it is useful to detect mistakes already during the editing of the code. For that the employed analysis has to be fast enough to enable interactive use. One method to achieve this is the use of incremental analysis, which combines analysis results of parts of the program to analyze the whole program. As an advantage, it is then possible to re-use large parts of the analysis results when a small change to the program occurs, namely for the unaffected parts of the program and for libraries. Thus the work required for analysis can be drastically reduced, which makes the analysis useful for interactive use. |
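The re-use of analysis results for unaffected program parts can be sketched with a small cache keyed by a content hash. This is a simplified illustration under a strong assumption: each unit (for example a function or a library) can be analyzed independently of the others. A real incremental analysis also has to re-analyze units that depend on a changed unit. The class and parameter names are hypothetical.

```python
import hashlib


class IncrementalAnalyzer:
    """Cache per-unit analysis results and recompute only changed units."""

    def __init__(self, analyze_unit):
        self._analyze_unit = analyze_unit   # function: source text -> result
        self._cache = {}                    # unit name -> (digest, result)
        self.reanalyzed = []                # units recomputed in the last run

    def analyze(self, units):
        """Analyze a dict of unit name -> source text and return all results."""
        self.reanalyzed = []
        results = {}
        for name, source in units.items():
            digest = hashlib.sha256(source.encode()).hexdigest()
            cached = self._cache.get(name)
            if cached is None or cached[0] != digest:
                cached = (digest, self._analyze_unit(source))
                self._cache[name] = cached
                self.reanalyzed.append(name)
            results[name] = cached[1]
        return results
```

After a first full run, changing a single unit causes only that unit to appear in `reanalyzed`. The results for all other units come from the cache, which is what makes the analysis fast enough for interactive use.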

|

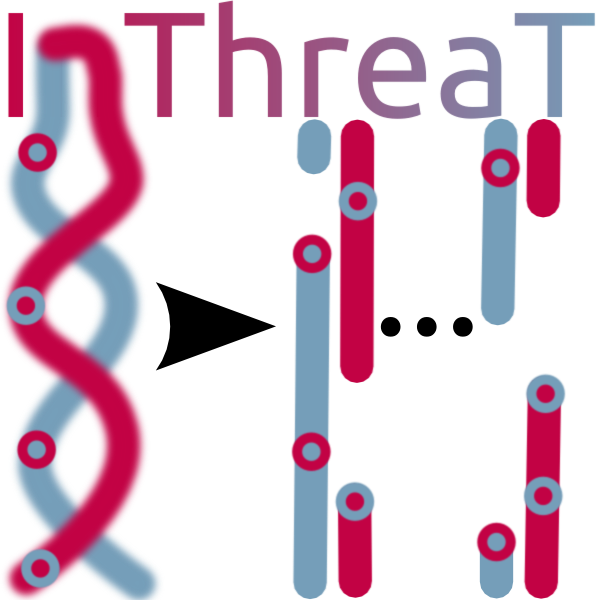

Inter-Thread Testing (Own Funds) Project leader: Abstract: In order to achieve higher computing performance, microprocessor manufacturers no longer try to achieve faster clock speeds - on the contrary: the absolute number of cycles has even decreased, while the number of independent processing units (cores) per processor is continually increased. Due to this evolution, developers must learn to think outside the box: The only way to make their applications faster (in terms of efficiency) is to modularize their programs such that independent sections of code execute concurrently. Unfortunately, present-day systems have reached a level of functional complexity such that even software for sequential execution is significantly error-prone - the parallelization for multiple cores adds yet another dimension to the non-functional complexity. Although research in the field of software engineering has produced several different quality assurance measures, there are still very few effective methods for testing concurrent applications, as the broad emergence of multi-core systems is relatively recent. This project aims to fill that gap by providing an automated test system. First of all, a testing criteria hierarchy is needed, which provides different coverage metrics tightly tailored to the concept of concurrency. Whilst for example branch coverage for sequential programs requires the execution of each program branch during the test (i.e. making the condition of an if-statement both true and false - even if there is no explicit else branch), a thorough test completion criterion for concurrent applications must demand the systematic execution of all relevant thread interleavings (i.e. all possibly occurring orderings of statements, where two threads may modify a shared memory area). A testing criterion defines the properties of the 'final' test set only, but does not provide any support for identifying individual test cases. 
In contrast to testing sequentially executed code, test scenarios for parallel applications must also comprise control information for deterministically steering the execution of the TUT (Threads Under Test). In 2012, a framework for Java was developed that automatically generates such control structures for TUT. The tester only has to provide the bytecode of the application; further details such as source code or restrictions of the test scenario selection are optional. The approach uses aspect-oriented programming techniques to enclose memory access statements (reads or writes of variables, responsible for typical race conditions) with automatically generated advice. After weaving the aspects into the SUT (System Under Test), variable accesses are intercepted at runtime, the execution of the corresponding thread is halted until the desired test scenario is reached, and the conflicting threads are reactivated in the order imposed by the given test scenario. In order to demonstrate the functionality, some naive sequence control strategies were implemented, e.g. alternately granting access to shared variables from different threads. In 2013, the prototypical implementation of the InThreaT framework was reengineered as an Eclipse plugin. This way, our approach can also be applied in a multi-project environment and the required functionality integrates seamlessly into the development environment (IDE) familiar to programmers and testers. In addition, the configuration effort required from the tester could be reduced, as e.g. selecting and persisting the interesting points of interleaving also became intuitively usable parts of the IDE. In order to automatically explore all relevant interleavings, we need an infrastructure to enrich functional test cases with control information, required to systematically (re)execute individual test cases. 
In 2013, such an approach for JUnit was evaluated and prototypically implemented; it allows individual test cases or whole test classes to be marked with suitable annotations. |
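The idea of halting threads at intercepted variable accesses and releasing them in a prescribed order can be illustrated with a small sketch. It is written in Python rather than Java and does not reproduce the InThreaT implementation; the names `InterleavingController`, `access`, and `run_scenario` are hypothetical and chosen only for this illustration.

```python
import threading


class InterleavingController:
    """Releases threads at instrumented access points in a prescribed order.

    Hypothetical helper for illustration; not part of InThreaT.
    """

    def __init__(self, schedule):
        self._schedule = list(schedule)  # e.g. ["A", "B", "A", "B"]
        self._position = 0
        self._cond = threading.Condition()

    def access(self, thread_name, action):
        """Block until it is thread_name's turn, then perform the access."""
        with self._cond:
            self._cond.wait_for(
                lambda: self._position < len(self._schedule)
                and self._schedule[self._position] == thread_name
            )
            result = action()
            self._position += 1
            self._cond.notify_all()  # wake the thread that is next in the schedule
            return result


def run_scenario(schedule):
    """Two threads increment a shared counter with a separate read and write."""
    shared = {"counter": 0}
    controller = InterleavingController(schedule)

    def worker(name):
        # Intercepted read of the shared variable.
        local = controller.access(name, lambda: shared["counter"])
        # Intercepted write of the shared variable.
        controller.access(name, lambda: shared.update(counter=local + 1))

    threads = [threading.Thread(target=worker, args=(n,)) for n in ("A", "B")]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return shared["counter"]
```

With the schedule `["A", "A", "B", "B"]` both increments take effect, whereas `["A", "B", "A", "B"]` forces both reads to happen before either write and thereby reproduces a lost update on every run.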

Integrated Tool Chain for Meta-model-based Process Modeling and Execution(Third Party Funds Single) Project leader: , Abstract:

As demands on the development of complex software systems are continuously increasing, compliance with well-defined software development processes becomes even more important. Large and globally distributed software development projects in particular tend to require long-running and dynamically changeable processes spanning multiple organizations. In order to describe and support such processes, there is a strong need for suitable process modeling languages and for powerful tool support. The results of a preceding cooperation project show that today's tool market lacks integrated tool chains that actually support the fine-grained and precise modeling of software development processes as well as their computer-aided execution, controlling, and monitoring. A cooperation project has bridged this gap. This cooperation project was carried out together with develop group as an industrial partner and was funded by BMWi. It started in October 2008, was planned for three researchers, and was finished in September 2011. The objective of this cooperation project was to prototype an integrated tool chain by using a rigorous, meta-model-based approach that supports modeling, enactment, and execution of industrial software development processes. Bearing the applicability of such a tool in mind, this approach was mainly intended to provide wide adaptability of process models to different industrial development scenarios, to define a user-friendly concept of process description, and to establish extensive computer-aided process execution support that contributes to the efficiency of development activities. These benefits were achieved by a high degree of formalism, by an integrated, generic concept of process modeling and process enactment, and by using commonly accepted industrial standards (UML, SPEM). The integrated tool chain developed in this project is based on an extension of the SPEM standard (eSPEM - enactable SPEM). 
eSPEM adds a behaviour modeling concept by reusing UML activity and state machine diagrams. In addition, eSPEM provides behaviour modeling concepts that are specific to software development processes, for example, dynamic task creation and scheduling. In 2012, an overview of the tool chain and eSPEM was presented at the "First Workshop on Academics Modeling with Eclipse", which was held in conjunction with the "8th European Conference on Modeling Foundations and Applications". In addition, practical experience from modeling SDPs in industrial projects has shown a rising importance of standards and reference models, which are subsequently summarized under the term quality standard. These quality standards are used to specify requirements for the target-oriented and effective execution of software development projects. The requirements address different goals related to, for example, quality and efficiency (Automotive SPICE, CMMI) or safety (ISO 26262 Road Vehicles - Functional Safety) aspects of SWDPMs (Software Development Process Models). In other words, these requirements, often described in terms of best practices, are imposed on the software process definition that is typically described by SWDPMs. Tracing these requirements to the process definition is a precondition for supporting efficient assessment activities and process improvement projects. An additional goal of this research project therefore lies in the integration of these quality standards with SWDPMs, with a special focus on environments that require conformance to more than one quality standard (e.g. CMMI, Automotive SPICE and ISO 26262).

|

|

Cluster and Grid computing made easy(Own Funds) Project leader: Abstract:Jackal is a project to create a distributed-shared-memory system for Java. This means that you can write a multi-threaded program (that you could run using normal JVMs on single machines as well) and deploy it on a cluster connected by a network. Jackal also sports a nice check-pointer so it can periodically write the program state to disk for fault tolerance. |

|

OpenMP/Java(Third Party Funds Single) Project leader: Abstract:JaMP is an implementation of the well-known OpenMP standard adapted for Java. JaMP allows one to program, for example, a parallel for loop or a barrier without resorting to low-level thread programming.

JaMP currently supports all of OpenMP 2.0 with partial support for 3.0 features, e.g., the collapse clause. JaMP generates pure Java 1.5 code that runs on every JVM. It also translates parallel for loops to CUDA-enabled graphics cards for extra speed gains. If a particular loop is not CUDA-able, it is translated to a threaded version that uses the cores of a typical multi-core machine. JaMP also supports the use of multiple machines and compute accelerators to solve a single problem. This is achieved by means of two abstraction layers. The lower layer provides abstract compute devices that wrap around the actual CUDA GPUs, OpenCL GPUs, or multicore CPUs, wherever they might be in a cluster. The upper layer provides partitioned and replicated arrays. A partitioned array automatically partitions itself over the abstract compute devices and takes the individual accelerator speeds into account to achieve an equitable distribution. The JaMP compiler applies code analysis to decide which type of abstract array to use for a specific Java array in the user’s program.

In 2010, the JaMP environment was extended to support the use of multiple machines and compute accelerators to solve a single problem. We developed two new abstraction layers. The lower layer provides abstract compute devices that wrap around the actual CUDA GPUs, OpenCL GPUs, or multicore CPUs, wherever they might be in a cluster. The upper layer provides partitioned and replicated arrays. A partitioned array automatically partitions itself over the abstract compute devices and takes the individual accelerator speeds into account to achieve an equitable distribution. The JaMP compiler applies code analysis to decide which type of abstract array to use for a specific Java array in the user’s program.
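How a partitioned array might take device speeds into account can be sketched as follows. This is a minimal illustration in Python under the assumption that each device is described by a positive integer relative speed; the function name `partition_by_speed` is hypothetical, and the sketch is not the JaMP implementation.

```python
def partition_by_speed(length, speeds):
    """Split the index range [0, length) into contiguous chunks.

    Each chunk size is proportional to the relative speed of its device.
    `speeds` is assumed to hold positive integers (hypothetical input format).
    Returns one (start, end) pair per device, with `end` exclusive.
    """
    if length < 0 or not speeds or any(s <= 0 for s in speeds):
        raise ValueError("length must be >= 0 and all speeds must be positive")
    total = sum(speeds)
    bounds = [0]
    cumulative = 0
    for speed in speeds:
        cumulative += speed
        # Integer arithmetic keeps the final bound exactly equal to length.
        bounds.append(length * cumulative // total)
    return [(bounds[i], bounds[i + 1]) for i in range(len(speeds))]
```

For example, `partition_by_speed(100, [1, 1, 2])` assigns the last device, which is twice as fast as the others, half of the elements.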

In 2012, we extended the JaMP framework to also handle Java objects on multiple machines and accelerators (and not just arrays of primitive types). We added two different ways to handle objects. Standard shared objects are replicated on all compute devices. Arrays of objects are now also replicated or partitioned over the different devices. To increase the performance of the program, the framework has to break with Java’s semantics. Java’s object structure is mapped to a flat memory structure for the execution on the different devices.

In 2013, we examined how to better support Java objects in OpenMP parallel code, regardless of where the code is executed. We found that we needed to restrict the language slightly by forbidding inheritance of objects used in a parallel block. This ensures that the objects will not be of a different type than what is seen at compile time. We use this property to, for example, allow object inlining into arrays to occur naturally. With the added inlining, communication of objects and arrays over the network and to the compute devices was accelerated enormously, including a small performance increase on the devices themselves.

In 2014 we developed a JaMP implementation for Android 4.0. Currently this version only supports the SIMD construct of OpenMP.

In 2015 we added OpenMP tasks (OpenMP 3.0) to JaMP. This makes it possible to parallelize recursive algorithms with JaMP.

|

|

JavaParty(Own Funds) Project leader: Abstract:JavaParty [http://svn.ipd.uni-karlsruhe.de/trac/javaparty/wiki/JavaParty] allows easy porting of multi-threaded Java programs to distributed environments such as clusters. Regular Java already supports parallel applications with threads and synchronization mechanisms. While multi-threaded Java programs are limited to a single address space, JavaParty extends the capabilities of Java to distributed computing environments. |

|

Compiler-supported parallelization for multi-core architectures(Own Funds) Project leader: Abstract:Several issues significantly slow the development of faster and more efficient computer architectures. Traditional technologies can no longer contribute to more hardware speed. Basic problems are the growing gap between memory access latency and CPU speed as well as the heat and wasted energy caused by increasing clock rates. Homogeneous and heterogeneous multi-core and many-core architectures were presented as a possible answer and offer enormous performance to the programmer. The multi-level cache hierarchy and decreased clock rates help avoid most of the above problems. Potentially, performance can increase even further through specialization of some hardware components. Current target architectures are GPUs with hundreds of arithmetic units and the Intel XeonPhi processor, which provides 60 or more cores including hyper-threading on a single board. While data parallel problems can be accelerated relatively easily by using the new hardware architectures, the implementation of task parallel problems is our main research focus. The difficulty is often the irregularity of the resulting task tree and thus the differing task run times. From the point of view of a programming systems research group, the open questions include the following: Which core executes which work packet in which order? When do you donate a work packet from one compute node to another? Which data belongs to a work packet, and are multiple cores/compute nodes allowed to access the data simultaneously? How do we have to merge data from multiple compute nodes? How can a compiler together with a runtime system create tasks and distribute work packets? In 2011, we implemented and extended the Cilk programming model for the heterogeneous CellBE architecture (one PowerPC core (PPU) with eight SPU "coprocessors"). 
The CellBE architecture offers an enormous computing potential on a single chip. To move a work packet in the heterogeneous architecture, we extended the Cilk programming model by an extra keyword. A source-to-source transformation then creates code for both the PPU and the SPU cores. Furthermore, we moved the data along with the work packets into the SPU local stores and used a garbage collection technique to free memory from remote SPUs later. In 2012, we focused on graphics cards (GPUs) as a second target architecture. GPUs offer a lot more performance than ordinary CPUs; however, achieving peak performance may be difficult. For data parallel problems, the performance can be achieved relatively easily using CUDA (NVIDIA) or OpenCL (AMD). However, it is much more difficult to port task parallel problems to the GPU with reasonable performance, which is one of the goals on our roadmap. Thus, we design, implement, and compare various load balancing algorithms. In 2012 we designed a first approach with hierarchical queues under the principle of work donation. In 2013, while further developing the load balancing algorithms for the GPU, we also targeted our work towards the Intel XeonPhi processor. With its many-core architecture and large register sets (and thus the ability to issue vector instructions on multiple data), the XeonPhi processor is a new challenge for load balancing algorithms. In practice, we extended and adapted Cilk for the XeonPhi such that we can automatically merge functions during the source-to-source transformation. This increases the Intel compiler's chances to parallelize automatically. We implemented several analyses that not only increase the number of candidate functions for merging but also avoid (or at least handle) divergence in those merged functions. In 2014, we extended our existing implementation for XeonPhi processors so that we can distribute the work over multiple XeonPhi processors. 
In contrast to the technique of work stealing that is used to distribute work over the many cores of a single XeonPhi, we use work donation to distribute the work to other XeonPhi processors. With a new source code annotation it is possible to mark the necessary data ranges for a work packet. These data ranges are then distributed along with the work packet and merged at synchronization points, which was the main challenge of the implementation. Furthermore, we started to extend the clang compiler of the LLVM framework with support for Cilk in order to automatically generate CUDA code for execution on GPUs. Along with the generated CUDA code, we designed a lightweight but general runtime system that manages the execution and the execution order of the work packets. We plan to implement analyses to avoid execution divergence as much as possible. In 2015, we evaluated and compared multiple load balancing algorithms for executing Cilk programs on the GPU. To this end, we implemented queuing algorithms for parallel access and improved the automated generation of the necessary CUDA code. The correct placement of Cilk keywords for synchronization is still a challenge for the programmer. Thus, from plain, recursive C code we generate multiple "plausible" code variants that include synchronisation statements. These code variants are then executed speculatively, and the result from the fastest correct variant is used for further computations. Furthermore, the size of the base case of the recursion is crucial for an optimal performance improvement. Consequently, we started to optimize the size of the base case using analyses at compile time and at run time, and we will replace recursive calls with function inlining and vectorisation. |

|

Wireless Localization(Third Party Funds Single) Project leader: Abstract:Localization Systems (also known as Realtime Location Systems, or RTLS) are becoming more and more popular in industry sectors such as logistics, automation and many more. These systems provide valuable information about the whereabouts of objects at runtime. Therefore, processes can be traced, analyzed and optimized. Besides the research activities at the core of localization systems (like resilient and interference-free location technologies or methods for highly accurate positioning), algorithms and techniques are emerging that identify meaningful information for further processing steps. In this context, the aim of the wireless localization project is research on automatic configuration methods for RTLSs as well as the generation of dynamic moving models and techniques for event processing on position streams at runtime. In 2009, we continued the development of our algorithms to estimate the position (pose) of the receiving antennas of location systems. The algorithms estimate measuring points that allow a fast and accurate measurement of the pose. We used a robot to execute the measurement automatically. The developed algorithm considers obstacles and the receiving characteristics of the location system and can sort out errors contained in the measurement data (e.g. multipath measurements). In 2010, models were developed that can be used as dynamic moving models. Learning methods are applied to adapt the models at run-time. A formal language called TBL (Trajectory Behavior Language) was developed for describing trajectories. Additional algorithms can shrink the size of that description and hence compress the data size required to store trajectories. We are evaluating methods for learning the moving models online. These are evaluated in a study with respect to motion prediction. 
Moreover, it is being investigated whether events can be predicted by analyzing and learning event streams from the localisation system at runtime. External Partners:

|

|

Model Driven Component Composition(Third Party Funds Single) Project leader: Abstract:This project is part of the INI.FAU collaboration between AUDI AG and the University of Erlangen-Nuremberg. It examines model-driven ways to integrate vehicle functions on electronic control units (ECUs). Moreover, the project develops supporting methods and tools for this task. The insights gained in the course of this project will be practically validated by integrating a damper control system into an AUTOSAR ECU. In the automotive industry it is common practice to develop in-car software on a high level of abstraction and in a model-based way. To eliminate uncertainties concerning resource consumption and runtime, it is necessary to test the developed software on the target hardware as early as possible. But due to cost and safety requirements, the integration of the software into an ECU is very time-consuming and demands special expertise going beyond that of the function developer. AUTOSAR (AUTomotive Open System ARchitecture) is on the way to becoming a standard for the basic software on ECUs. But due to the novelty of this standard, there are neither processes nor tools to support the integration of the developed in-car software into an ECU. In 2008, we examined the modeling expressiveness of AUTOSAR with respect to both its applicability and possible conflicts with existing standards and technologies that are currently in use at Audi. Furthermore, the automatic generation of an AUTOSAR software architecture from a single damper control component was implemented. Since 2009, we have been implementing a model-driven approach that supports the integration of software into an ECU and integrating it into the tool set used at Audi. In particular, we are looking at the automatic configuration of the bus communication by means of a bus database and the automatic task scheduling among the application processes. 
The model-driven development, which in this case is based on the Eclipse Modeling Framework, will enable easier tailoring of the emerging prototype to changing requirements. In 2010, we exploited the information that is available in an AUTOSAR project to automatically configure local and remote communication between software components. We also developed a genetic algorithm that uses dependency information to automatically generate task schedules that minimize communication latencies between cooperating software components. The existing prototype has been extended with the above-mentioned methods. |

|

Parallel code analysis on a GPU(Own Funds) Project leader: Abstract:In compiler construction there are analyses that propagate information along the edges of a graph and modify it until a fixed point is reached and the information no longer changes. In this project we built the ParCAn framework to accelerate such analyses by exploiting the massive parallelism of graphics cards. In 2016, our research focus was on synchronization mechanisms for GPUs. Known synchronization methods for CPUs (e.g. a spin lock) cannot be used on GPUs without further adjustment, since the special architectural properties of GPUs easily lead to deadlocks and livelocks. Synchronization is required (even for predominantly data-parallel graph implementations) if data dependences occur dynamically. We have therefore developed a novel synchronization mechanism which solves two non-trivial problems related to GPUs: First, we prevent deadlocks and livelocks. Second, we retain as much parallelism as possible by allowing data-parallel threads to work on disjoint areas of a data structure concurrently. For example, think of threads that modify disjoint locations of a graph without affecting its structural integrity. In our approach, a programmer can provide rules that describe the conditions under which a parallel access is allowed. At runtime, we check these rules and determine how many threads can run in parallel. We are currently extending the above synchronisation mechanism with a scheduler that redistributes conflicting data accesses so that the SIMD execution on a GPU causes less serialization than without the re-ordering. Hence, the degree of parallelism grows. The underlying idea exploits the fact that GPUs organize threads in hierarchical units. If the above synchronization mechanism detects a conflicting access in one of these units, it checks on the next smaller unit whether the conflict can also be found there. If this is not the case, the threads in that smaller unit can run in parallel. 
This is much better than serializing all threads in the enclosing unit. In this situation it is the scheduler’s task to redistribute the detected collisions across the units so that as many threads as possible can run in parallel. As the scheduling is performed at run-time, it needs to be efficient, must itself run in parallel, and can potentially make use of the dynamic thread creation capabilities of modern GPUs. Graphs are fundamental data structures for representing relations between data (e.g., social networks, web link analysis). Graphs can have millions or billions of vertices and edges. GPUs can process graphs very efficiently with thousands of threads in parallel. Graph analyses use the Bulk Synchronous Parallel (BSP) model, which divides the analysis into three strictly separated phases: computation, communication and synchronization. The latter two require communication with the host system (CPU), which slows down execution. Our GPU-based compiler also follows the BSP model. Internally the code is represented as a (control flow) graph. This graph is transferred to the GPU and analyzed there. Every code modification triggers this cycle. The graph thus has to be generated and transferred to the GPU very quickly.
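The kind of analysis that propagates information along graph edges until a fixed point is reached can be sketched with a sequential worklist algorithm. The sketch below is in Python, runs on the CPU, and is not ParCAn's GPU implementation; the function name `solve_forward` and the `gen`/`kill` set representation are assumptions made for this illustration.

```python
from collections import deque


def solve_forward(successors, gen, kill):
    """Forward data-flow analysis on a control flow graph.

    successors: dict mapping every node to a list of its successor nodes
    gen, kill:  dicts mapping every node to a set of facts
    Returns a dict with the set of facts valid at the exit of each node.
    """
    predecessors = {node: [] for node in successors}
    for node, succs in successors.items():
        for succ in succs:
            predecessors[succ].append(node)

    out = {node: frozenset() for node in successors}
    worklist = deque(successors)
    while worklist:
        node = worklist.popleft()
        # Merge the facts arriving over all incoming edges.
        incoming = frozenset().union(*(out[p] for p in predecessors[node]))
        new_out = frozenset(gen[node]) | (incoming - frozenset(kill[node]))
        if new_out != out[node]:
            out[node] = new_out
            # The information changed, so all successors must be revisited.
            worklist.extend(successors[node])
    return out


# Small loop-shaped graph: entry -> loop -> {body, exit}, body -> loop.
cfg = {"entry": ["loop"], "loop": ["body", "exit"], "body": ["loop"], "exit": []}
gen = {"entry": {"d1"}, "loop": set(), "body": {"d2"}, "exit": set()}
kill = {"entry": {"d2"}, "loop": set(), "body": {"d1"}, "exit": set()}
result = solve_forward(cfg, gen, kill)
```

In this example both definitions `d1` and `d2` reach the exit node, because the loop body may or may not have been executed. The loop ends once no node's fact set changes any more, which is the fixed point mentioned above.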

Publications in the field of graph analysis focus on optimizing the computation time. The time for communication and synchronization is ignored, although it has a strong impact on the end-to-end execution time. Our compiler considers every phase of the BSP model. In 2017 we published a paper on a technique that significantly reduces the time for synchronization.

In addition, we focus on speeding up the communication phase of the BSP model. Communication here means the transfer of the graph in both directions (between GPU and host). The graph data structure used has a strong impact on the run-time behavior, and it also influences the computation phase. Since there is no publication in the literature that systematically investigates the impact of the data structure on the end-to-end run-time of a GPU graph analysis, we implemented a number of benchmarks that use different attributes of graphs (e.g., access to successors/predecessors, random node access) and eight different graph data structures to represent graphs on the GPU. For the measurements we used a number of structurally different graphs. The results are likely to help developers pick the right graph data structure for their GPU problem. In 2018 we completed our comparative study on the efficiency of graph data structures on GPUs. To show the effectiveness of our framework, we integrated it into the LLVM compiler framework. We picked four LLVM analyses and parallelized them with ParCAn. Ample measurements show that our framework can accelerate LLVM’s compilation process by up to 40%. A publication was accepted at the 28th International Conference on Compiler Construction and will receive the Best-Paper-Award.

In 2019, ParCAn was adjusted to the new execution model of NVIDIA’s latest GPU architectures. With the introduction of the Volta architecture, threads can make progress independently of each other. Since Volta, every thread has its own program counter and call stack. Previously, a group of threads (called a warp) shared a common program counter and call stack. The threads either executed the same instruction or were idle (lock-step execution). Applications that are not adjusted to the new execution model will compute wrong results. As threads now execute independently of each other, race conditions can occur within warps. The older lock-step execution model prevented this implicitly, because it inserted synchronization points. We inspected ParCAn’s source code for code fragments susceptible to causing race conditions on new architectures. These fragments were adjusted so that they execute properly on the latest NVIDIA architectures.

In 2020, we successfully completed this research project. We demonstrated that parallelizing the particularly cost-intensive data flow analyses can speed up the compilation process by up to 31%. Thus, our research leads the way towards parallelized compilers that meet the requirements of today's software projects. The importance of this research topic was underlined by a "Best Paper Award" at the renowned "Compiler Construction" conference, see references.

The use of the GPU as the target architecture raised other research-related questions that were also published. Publications:

|

|

ParSeMiS - the Parallel and Sequential Graph Mining Suite(Own Funds) Project leader: Abstract:The ParSeMiS project (Parallel and Sequential Graph Mining Suite) searches for frequent, interesting substructures in graph databases. This task is becoming increasingly popular because science and commerce need to detect, store, and process complex relations in huge graph structures.

In 2008, the following goals have been achieved:

In 2009, the following goals have been attacked:

In 2010, the distributed stack implementations have also been tested on other algorithms and data structures.

|

| PARSEMIS on GitHub |

|

Parallelization techniques for embedded systems in automation(Own Funds) Project leader: Abstract:This project was launched in 2009 to address the refactoring and parallelization of applications used in the field of industrial automation. These programs are executed on specially designed embedded systems. This hardware forms an industry standard and is used worldwide. As multicore architectures are increasingly used in embedded systems, existing sequential software must be parallelized for these new architectures in order to improve performance. As these programs are typically used in the industrial domain for process control and factory automation, they have a long life cycle. Because of this, the programs are often no longer maintained by their original developers. Besides that, a lot of effort was spent to guarantee that the programs work reliably. For these reasons, the software is extended only very reluctantly. Therefore, a migration of these legacy applications to new hardware and a parallelization cannot be done manually, as this is too error-prone. Thus, we need tools that perform these tasks automatically or aid the developer with the migration and parallelization.

Research on parallelization techniques

We developed a special compiler for the parallelization of existing automation programs. First, we examined automation applications with respect to automatic parallelizability. We found that it is hard to perform an efficient automatic parallelization with existing techniques. Therefore, this part of the project focuses on two steps to handle this situation. As a first step, we developed a data dependence analysis that identifies potential critical sections in a parallel program, presents them to the programmer and adds their protection to the code. We were able to show that our approach to identifying critical sections finds atomic blocks that closely match the atomic blocks that an expert would add to the code. 
Besides that, we showed in 2014 that the impact on execution times is negligible if our technique finds atomic blocks that are larger than necessary or that are not necessary at all. As a second step, we refined and enhanced existing techniques (software transactional memory (STM) and lock inference) to implement atomic blocks. In our approach, an atomic block uses STM as long as lock inference would lead to coarse-grained synchronization. The atomic block switches from STM to lock inference as soon as fine-grained synchronization is possible. With this technique, an atomic block always uses fine-grained synchronization while the runtime overhead of STM is minimized at the same time. We showed that (compared to a pure STM or lock inference implementation) our technique speeds up execution by a factor between 1.1 and 6.3. Although fine-grained synchronization in general leads to better performance than a coarse-grained solution, there are cases where a coarse-grained implementation shows equal performance. We therefore presented a runtime mechanism for an STM that also works together with our combined technique. This runtime mechanism starts with a small number of locks, i.e., coarse-grained locking, where accesses to different shared variables are protected by the same lock. If this coarse-grained locking leads to too many non-conflicting accesses waiting for the same lock, our approach gradually increases the number of locks. This makes the locking more fine-grained so that non-conflicting accesses can be executed concurrently. Our runtime mechanism that dynamically tunes the locking granularity makes the programs run up to 3.0 times faster than a fixed coarse-grained synchronization. We completed this project part in 2014.

Research on migration techniques

Our research on the migration of legacy applications originally centered on a tool that automatically replaces suboptimal code constructs with better code. 
The code sequences that had to be replaced as well as the replacement codes were specified by developers by means of a newly developed pattern description language. However, we found this approach to be too difficult for novice developers. This led us to the development of a new tool that automatically learns and generalizes patterns from source code archives, recognizes them in other projects, and presents recommendations to developers. The foundation of our tool lies in the comparison of two versions of the same program. It extracts the changes that were made between the two versions of the source code, derives generalized patterns of suboptimal and better code from these changes, and saves the patterns in a database. Our tool then uses these patterns to suggest similar changes for the source code of different programs. In 2014 we developed a new symbolic code execution engine to minimize the number of wrong recommendations. Depending on the number and the generality of the patterns in the database, it is possible that without the new engine our tool generates some unfitting recommendations. To discard the unfitting ones, we compare the summary of the semantics/behavior of the recommendation with the summary of the semantics/behavior of the database pattern. If the two differ too much, our tool drops the recommendation from the results. The distinctive features of our approach are its applicability to isolated code fragments and its automatic configuration that does not require any human interaction. The latest results of our tool SIFE are found online (last update: 2014-05-09). Parts of the project are funded by the "ESI-Anwendungszentrum" [http://www.esi-anwendungszentrum.de/] |
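The first step described above, extracting the changes between two versions of the same program, can be sketched with a line-based comparison. The sketch uses Python's standard `difflib` module and is only an illustration; it is not the technique used by SIFE, it does not cover the generalization of patterns, and the function name `extract_changes` is hypothetical.

```python
import difflib


def extract_changes(old_source, new_source):
    """Return a list of (removed_lines, added_lines) pairs.

    Each pair describes one contiguous region that differs between the
    old and the new version of a source file.
    """
    old_lines = old_source.splitlines()
    new_lines = new_source.splitlines()
    matcher = difflib.SequenceMatcher(a=old_lines, b=new_lines, autojunk=False)
    changes = []
    for tag, i1, i2, j1, j2 in matcher.get_opcodes():
        if tag != "equal":
            changes.append((old_lines[i1:i2], new_lines[j1:j2]))
    return changes


# Two versions of a small, made-up code fragment.
old_version = "x = a.size()\nif x == 0:\n    return"
new_version = "if a.isEmpty():\n    return"
changes = extract_changes(old_version, new_version)
```

Here `changes` contains a single pair in which the two lines of the old version are replaced by the one line of the new version. A pattern learner would still have to abstract from concrete identifiers such as `a` before the change can be suggested elsewhere.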

|

Recurrent Neuronal Networks (RNNs) for Real-Time Estimation of Nonlinear Motion Models(Third Party Funds Single) Project leader: Abstract:

With the growing availability of information about an environment (e.g., the geometry of a gymnasium) and about the objects therein (e.g., athletes in the gymnasium), there is an increasing interest in bringing that information together profitably (so-called information fusion) and in processing that information. For example, one would like to reconstruct physically correct animations (e.g., in virtual reality, VR) of complex and highly dynamic movements (e.g., in sports situations) in real-time. Likewise, industrial manufacturing plants that suffer from unfavorable environmental conditions (e.g., magnetic field interference or a missing GPS signal) benefit from, for example, high-precision localization of goods. Typically, to describe movements, one uses either poses that describe a "snapshot" of a state of motion (e.g., idle state, stoppage), or a motion model that describes movement over time (e.g., walking or running). In addition, human movements may be identified, detected, and sensed by different sensors (e.g., on the body) and mapped in the form of poses and motion models. Different types of modern sensors (e.g., camera, radio, and inertial sensors) provide information of varying quality.

In principle, expensive and highly precise measuring instruments make it possible to extract poses and motion models without errors, for example from positions on small tracking areas. Positions, e.g., of human extremities, can describe or be described by poses and motion models. Camera-based sensors deliver the required high-frequency and high-precision reference measurements on small areas. However, as the size of the tracking area increases, the usability of camera-based systems decreases (due to inaccuracies or occlusion issues). Likewise, on large areas radio and inertial sensors only provide noisy and inaccurate measurements. Although a combination of radio and inertial sensors based on Bayesian filters achieves greater accuracy, it is still inadequate for precisely sensing human motion on large areas, e.g., in sports, as human movement changes abruptly and rapidly. Thus, the resulting motion models are inaccurate.

Furthermore, every human movement is highly nonlinear (or unpredictable). Today's motion models cannot map this nonlinearity correctly. Bayesian filters describe these models, but these (statistical) methods break a nonlinear problem down into linear subproblems, which in turn cannot represent the motion physically. In addition, current methods incur high latency when they must be accurate.

Due to these three problems (inaccurate position data on large areas, nonlinearity, and latency), today's methods are unusable, e.g., for sports applications that require short response times. This project aims to counteract these nonlinearities by using machine learning methods. The project includes research on recurrent neural networks (RNNs) to create nonlinear motion models. Modern Bayesian filtering methods (e.g., Kalman and particle filters) and other statistical methods can only describe the linear portions of nonlinear human movements (e.g., the relative position of the head w.r.t. the trunk while walking or running) and are thus physically not completely correct.
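To make the linearity limitation more concrete, the following minimal sketch shows one predict/update step of a one-dimensional constant-velocity Kalman filter. It is not the filter used in the project: the state layout `[position, velocity]`, the white-noise-acceleration process model, and the parameter names `q` and `r` are assumptions chosen for illustration. The prediction step is a fixed linear map, which is why abrupt changes of direction are only followed with a delay.

```python
def kalman_cv_step(x, P, z, dt, q, r):
    """One predict/update step of a 1-D constant-velocity Kalman filter.

    x: [position, velocity]; P: 2x2 covariance as nested lists;
    z: measured position; q: process-noise intensity (assumed);
    r: measurement-noise variance (assumed).
    """
    # Predict with the linear model: pos' = pos + vel * dt, vel' = vel.
    pos = x[0] + x[1] * dt
    vel = x[1]
    # P' = F P F^T + Q with F = [[1, dt], [0, 1]].
    p00 = P[0][0] + dt * (P[1][0] + P[0][1]) + dt * dt * P[1][1] + q * dt**3 / 3
    p01 = P[0][1] + dt * P[1][1] + q * dt**2 / 2
    p10 = P[1][0] + dt * P[1][1] + q * dt**2 / 2
    p11 = P[1][1] + q * dt
    # Update with a position-only measurement, H = [1, 0].
    s = p00 + r              # innovation variance
    k0, k1 = p00 / s, p10 / s  # Kalman gain
    y = z - pos              # innovation
    x_new = [pos + k0 * y, vel + k1 * y]
    P_new = [[(1 - k0) * p00, (1 - k0) * p01],
             [p10 - k1 * p00, p11 - k1 * p01]]
    return x_new, P_new


# Track an object that moves at a constant 2 m/s, sampled every 0.1 s.
state, cov = [0.0, 0.0], [[10.0, 0.0], [0.0, 10.0]]
for k in range(1, 201):
    state, cov = kalman_cv_step(state, cov, z=2.0 * 0.1 * k, dt=0.1, q=1.0, r=0.01)
```

For motion that really is close to constant velocity, the estimate settles quickly. The difficulty described above arises when the true motion deviates from the assumed linear model.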

Therefore, the main goal is to evaluate how machine learning methods can describe complex and nonlinear movements. To this end, we examined whether RNNs describe the movements of an object physically correctly and whether they can support or replace previous methods. As part of a large-scale parameter study, we simulated physically correct movements and optimized RNN procedures on these simulations. We successfully showed that, with the help of suitable training methods, RNN models can learn either physical relationships or shapes of movement.

This project addresses three key topics:

I. A basic implementation investigates how and why methods of machine learning can be used to determine models of human movement.

In 2018, we first established a deeper understanding of the initial situation and problem definition. With the help of different basic implementations (different motion models), we investigated (1) how different movements (e.g., humans: walk, run, slalom; vehicles: meander, zig-zag) affect measurement inaccuracies of different sensor families, (2) how measurement inaccuracies of different sensor families (e.g., visible orientation errors, audible noise, and deliberate artificial errors) affect human motion, and (3) how different filter methods for error correction (that balance accuracy and latency) affect both motion and sensing. In addition, we used machine learning to show (4) how measurement inaccuracies (due to the use of current Bayesian filtering techniques) correlate nonlinearly with human posture (e.g., gait apparatus) and predictably affect health (simulator sickness).

We studied methods of machine and deep learning for motion detection (humans: head, body, upper and lower extremity; vehicles: single- and bi-axial) and motion reconstruction (5) based on inertial, camera, and radio sensors, as well as various methods for feature extraction (e.g., SVM, DT, k-NN, VAE, 2D-CNN, 3D-CNN, RNN, LSTM, M/GRU). We interconnected these into different hybrid filter models to enrich extracted features with temporal and context-sensitive motion information, potentially creating more accurate, more robust, and close to real-time motion models. In this way, the hybrid models learned (6) motion models for multi-axis vehicles (e.g., forklifts) based on inertial, radio, and camera data, which generalize to different environments or tracking surfaces (with varying size, shape, and sensory structure, e.g., magnetic field, multipath, texturing, and illumination). Furthermore (7), we gained a deeper understanding of the effects of non-constant accelerated motion models on radio signals. On the basis of these findings, we trained an LSTM model that predicts different movement speeds and motion forms of a single-axis robot (i.e., Segway) close to real time and more accurately than conventional methods.

In 2019, we found that these models can also predict human movement (human movement model). We also determined that the LSTM models can either be fully self-sufficient at runtime or integrated as support points into localization estimates, e.g., into Pedestrian Dead Reckoning (PDR) methods.
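The idea of using model outputs as support points for Pedestrian Dead Reckoning can be sketched as follows. This is a simplified illustration, not the project's implementation: the function name `pdr_track`, the `support_points` mapping, and the fixed blending `weight` are hypothetical. Each step advances the position by a step length along a heading. Whenever an absolute position estimate is available for a step (for example from a learned model), it is blended in to limit the drift of the relative method.

```python
import math


def pdr_track(start, steps, support_points=None, weight=0.5):
    """Integrate (step_length_m, heading_rad) pairs into a 2-D path.

    support_points (hypothetical): maps a step index to an absolute (x, y)
    estimate that is blended into the dead-reckoned position with `weight`.
    """
    support_points = support_points or {}
    x, y = start
    path = [(x, y)]
    for i, (length, heading) in enumerate(steps):
        # Relative update: move one step along the current heading.
        x += length * math.cos(heading)
        y += length * math.sin(heading)
        # Optional absolute correction from a support point.
        if i in support_points:
            sx, sy = support_points[i]
            x = (1.0 - weight) * x + weight * sx
            y = (1.0 - weight) * y + weight * sy
        path.append((x, y))
    return path


# One step east, then one step north, without any support points.
path = pdr_track((0.0, 0.0), [(1.0, 0.0), (1.0, math.pi / 2)])
```

With `weight=1.0` a support point fully replaces the dead-reckoned position, and with `weight=0.0` it is ignored.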

II. Based on this, we try to find ways to optimize the basic implementation in terms of robustness, latency, and reusability.

In 2018, we used the findings from I. (1-7) to stabilize so-called (1) relative Pedestrian Dead Reckoning (PDR) methods using motion classifiers. These enable a generalization to any environment. A deeper understanding of radio signals (2) allowed us to learn long-term errors in RNN-based motion models. This improves position accuracy and stability and enables near real-time prediction. First experiments showed the robustness of the movement models (3) with the help of different real movement trajectories (unknown to the models) for one- and two-axis vehicles. Furthermore, we investigated (4) how hybrid filter models (e.g., interconnection of feature extractors such as 2D/3D-CNNs and time-series trackers such as RNNs-LSTM) provide more accurate, more stable, and filtered (outlier-corrected) results.

In 2019, we showed that models of the RNN family extrapolate movements into the future so that they compensate for the latency of the processing pipeline and beyond. Furthermore, we examined the explainability, interpretability, and robustness of these models, and their reusability for human movement.

With the help of a simulator, we generated physically correct movements, e.g., positions of pedestrians, cyclists, cars, and planes. Based on this data, we showed that RNN models can interpolate between different types of movement and can compensate for missing data points, interpret white and random noise as such, and can extrapolate movements. The latter enables processing-specific latency to be compensated and enables human movement to be predicted from radio and inertial data in real time.
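The kind of training data described here can be sketched with a very small generator. This is not the simulator used in the project: the function name `simulate_track`, the constant-acceleration motion, the Gaussian noise model, and the drop probability are assumptions for illustration only. The sketch produces a ground-truth track together with an observed track that contains noisy samples and missing data points (`None`).

```python
import random


def simulate_track(n, dt, v0, a, noise_std, drop_prob, seed=0):
    """Generate a 1-D constant-acceleration track plus degraded observations.

    Returns (truth, observed); observed entries are None where a sample was
    dropped and truth + Gaussian noise otherwise. All parameters are
    illustrative assumptions.
    """
    rng = random.Random(seed)  # seeded for reproducible experiments
    truth, observed = [], []
    for k in range(n):
        t = k * dt
        pos = v0 * t + 0.5 * a * t * t  # physically consistent position
        truth.append(pos)
        if rng.random() < drop_prob:
            observed.append(None)       # missing data point
        else:
            observed.append(pos + rng.gauss(0.0, noise_std))
    return truth, observed


# 100 samples at 10 Hz with 5 cm noise and 10 % missing measurements.
truth, observed = simulate_track(100, 0.1, v0=1.0, a=0.5, noise_std=0.05, drop_prob=0.1)
```

A model trained on pairs of `observed` and `truth` has to learn both to fill the gaps and to separate noise from motion.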

Novel RNN architecture. Furthermore, in 2019, we researched a new architecture, or topology, of a neural network, that balances the strengths and weaknesses of flat neural networks (NN) and recurrent networks. We found this optimal NN for determining physically correct movement in a large-scale parameter study. In particular, we also optimized the model architecture and parameters for human-centered localization. These optimal architectures predict human movement far into the future from as little sensor information as possible. The architecture with the least localization error combines two DNNs with an RNN.

Interpretability of models. In 2019, we examined the functionality of this new model. For this purpose, we researched a new process pipeline for the interpretation and explanation of the model. The pipeline uses the mutual information flow and the mutual transfer entropy in combination with various targeted manipulations of the hidden states and suitable visualization techniques to describe the state of the model at any time, both subjectively and objectively. In addition, we adapted a variational auto-encoder (VAE) to better visualize and interpret extracted features of a neural network. We designed and parameterized the VAE such that the reconstruction error of the signal is within the range of the measurement noise and at the same time forced the model to store disentangled features in its latent space. This disentanglement enabled the first subjective statements about the interrelationships of the features that are really necessary to optimally code the channel state of a radio signal.
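As a simplified illustration of one building block of such a pipeline, the following sketch estimates the mutual information between two discrete signals with a plug-in (frequency-count) estimator. It is an assumption-laden stand-in: the function name `mutual_information` is hypothetical, the inputs are assumed to be already discretized (e.g., binned hidden-state activations and binned outputs), and transfer entropy, which additionally conditions on the past of the signals, is not covered.

```python
import math
from collections import Counter


def mutual_information(xs, ys):
    """Plug-in estimate of I(X; Y) in bits for two equally long discrete sequences."""
    n = len(xs)
    joint = Counter(zip(xs, ys))  # counts of co-occurring value pairs
    px = Counter(xs)              # marginal counts of X
    py = Counter(ys)              # marginal counts of Y
    mi = 0.0
    for (x, y), c in joint.items():
        # p(x, y) * log2( p(x, y) / (p(x) * p(y)) ), written with raw counts
        mi += (c / n) * math.log2(c * n / (px[x] * py[y]))
    return mi


# Identical binary signals share one bit; independent ones share none.
same = mutual_information([0, 0, 1, 1], [0, 0, 1, 1])
indep = mutual_information([0, 0, 1, 1], [0, 1, 0, 1])
```

A high value between a hidden unit and an output suggests that the unit carries information relevant to that output, which is the kind of statement the pipeline aims to make objectively.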

Compression. In 2019, we discovered a side effect of the VAE that offers the possibility of decentralized preprocessing of the channel information directly on the antenna. This compression then reduces the data traffic, lowers the communication load, and thus increases the number of possible participants in the communication and localization in a closed sensor network.

Influence of the variation of the input information. In 2019, we also examined how changes in the input sequence length of a recurrent neural network affect the learning success and the type of results of the model. We discovered that longer sequences push the model toward behaving as a motion model, i.e., learning the form of movement, while with shorter sequences the model tends to learn physical relationships. The optimal balance between short and long sequences yields the highest accuracy.
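The input sequence length enters the training setup at the point where a recorded series is cut into windows. The following minimal sketch shows this step. The function name `make_windows` and the `horizon` parameter are hypothetical, and the actual preprocessing in the project may differ.

```python
def make_windows(series, seq_len, horizon=1):
    """Cut a series into (input_window, target) training pairs.

    seq_len: number of past samples the model sees per example;
    horizon: how many steps ahead the target lies (assumed parameter).
    """
    pairs = []
    for start in range(len(series) - seq_len - horizon + 1):
        window = series[start:start + seq_len]
        target = series[start + seq_len + horizon - 1]
        pairs.append((window, target))
    return pairs


samples = [0, 1, 2, 3, 4, 5]
short = make_windows(samples, seq_len=2)  # many short contexts
long_ = make_windows(samples, seq_len=4)  # fewer, longer contexts
```

Varying `seq_len` while keeping everything else fixed is one way to set up the kind of comparison described above.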

We investigated speed estimation using the new method. When used in a PDR model, this increased the position accuracy. An initial study in 2019 examined in detail which methods are best suited to estimate the speed of human movement from a raw inertial signal. A new method, a combination of a one-dimensional CNN and a BLSTM, outperformed the previous state of the art.

In 2020, we optimized the architecture of the model with regard to its prediction accuracy and investigated the effects of a deep fusion of Bayesian and DL methods on the prediction accuracy and robustness.

Optimization. In 2020, we improved the existing CNN and RNN architecture and proposed the fusion of ResNet and BLSTM. We replaced the CNN with a residual network to extract deeper and higher-quality features from a continuous data stream. We showed that this architecture entails higher computing costs but surpasses the accuracy of the state of the art. In addition, the RNN architecture can be scaled down to counter the blurring of the context vector of the LSTM cells with very long input sequences, as the ResNet offers higher-quality features.

Deep Bayesian Method. In 2020, we investigated whether methods of the RNN family can extract certain movement properties from recorded movement data to replace the measurement-, process-, and transition-noise distributions of a Kalman filter (KF). We showed that highly optimized LSTM cells can reconstruct trajectories more robustly (low error variance) and more precisely (positional accuracy) than an equally highly optimized KF. The deep coupling of an LSTM with a KF, so-called Deep Bayes, provided the most robust and precise positions and trajectories. This study also showed that, among the methods trained on realistic synthetic data, the Deep Bayesian method needed the least real data to adapt to a new unknown domain, e.g., unknown motion shapes and velocity distributions.

III. Finally, a demonstration of feasibility shall be tested.

In 2018, a large-scale social science study opened the world's largest virtual dinosaur museum and showed that (1) a pre-selected (application-optimized) model of human movement maps and predicts human motion robustly and accurately (i.e., without a significant impact on simulator sickness). We used this as a basis for comparison tests with other models that are human-centered and generalize to different environments.

In 2019, we developed two new live demonstrators that are based on the research results achieved in I and II. (1) The first demonstrator is a model railway that crosses a landscape with a tunnel at variable speeds. The tunnel represents realistic and typical environmental characteristics that lead to nonlinear multipath propagation of a radio transmitter to be located and ultimately to an incorrectly determined position. This demonstrator shows that the RNN methods researched as part of the research project can localize highly precisely and robustly, both on complex channel impulse responses and on dimensionally reduced response times, and also deliver better results than conventional Kalman filters. (2) We used the second demonstrator to visualize the movement of a person's upper extremity. We recorded human movement using inexpensive inertial sensors attached to both arm joints, classified it using machine learning and deep learning, and derived motion parameters. A graphical user interface visualizes the movement and the derived parameters in near real time.

The planned generalizability, e.g., of human-centered models and the applicability of RNN-based methods in different environments, has been demonstrated using (1) and (2).

In 2019, we applied the proposed methods in the following applications:

Application: Radio Signal. We classified the channel information of a radio system hierarchically. We translated the localization problem of a Line of Sight (LoS) and Non Line of Sight (NLoS) classifier into a binary problem. Hence, we can now localize a position to within a meter, based on individual channel information from a single antenna, if the environment provides heterogeneous channel propagation.

Furthermore, we simulated LoS and NLoS channel information and used it to interpolate between different channels. This enables the providers of radio systems to respond a priori, in simulation, to changing or new environments in the channel information. By selectively retraining the models with the simulated knowledge, we obtained more robust models.